LLM Analysis: Which One Is the Best?

This post refreshes the LLM landscape as of December 2025, with quick takes on which model is best at what, how to choose between them, and which open-weight models are worth self-hosting.

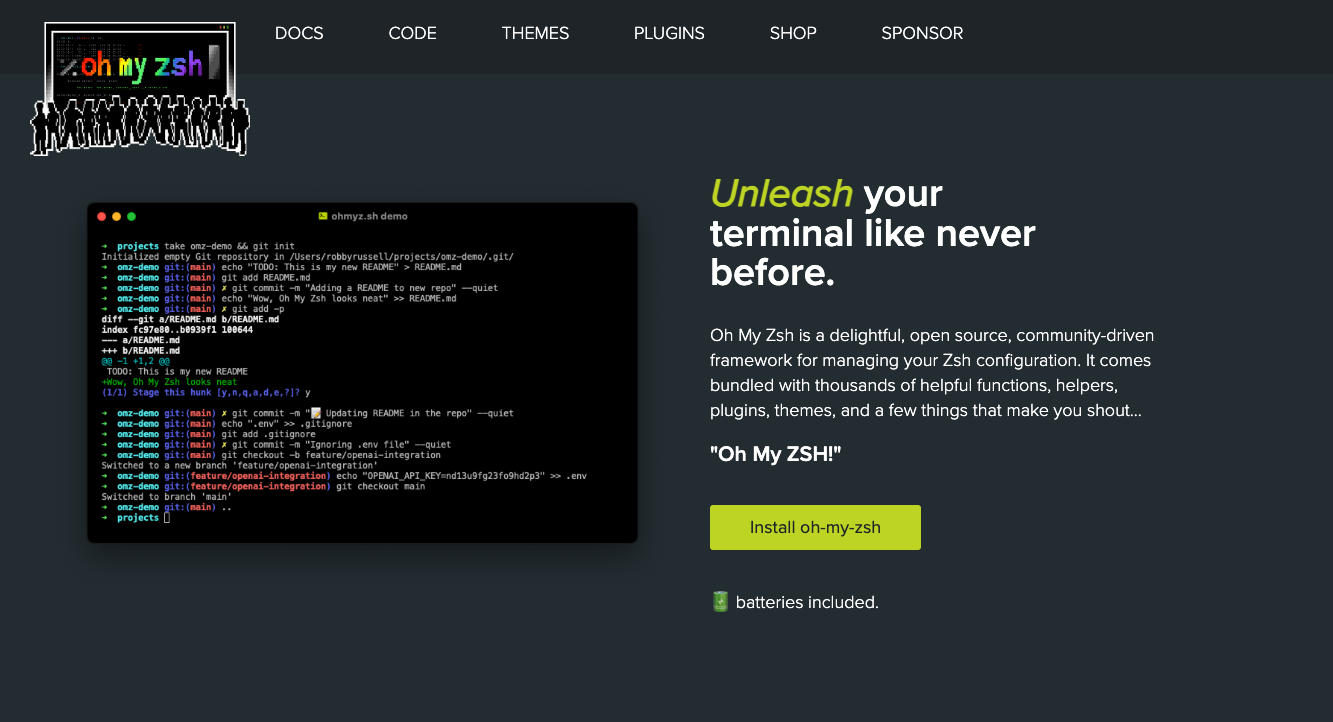

I've been using Oh My Zsh for a while now, and recently I decided to supercharge my terminal setup with some modern CLI tools that have become essential to my daily workflow. In this post, I'll walk you through installing and configuring four powerful tools: eza, bat, delta, and zoxide. Each one replaces a traditional Unix command with a smarter, more feature-rich alternative.

I've been exploring various AI coding assistants lately, and I recently discovered Claude Code with GLM integration from Z.AI. After setting it up and using it for a few weeks, I'm excited to share my experience and walk you through the complete setup process. In this post, I'll show you how to leverage the latest GLM-4.6 models for your coding projects.

Managing Kubernetes clusters efficiently often requires more than just kubectl basics. Over time, engineers develop and collect scripts to automate, debug, and monitor their clusters. This post curates some of the most useful scripts and command-line tricks from the open-source eldada/kubernetes-scripts repository, with practical explanations and usage tips for SREs and DevOps engineers.

In today's fast-evolving tech landscape, continuous learning is essential for anyone in Site Reliability Engineering (SRE), DevOps, or Machine Learning/Data Analytics (ML/DA). This post outlines my structured learning journey, highlighting key knowledge areas, practical skills, and resources that have helped me grow as an engineer. Whether you're just starting or looking to deepen your expertise, I hope this guide provides a valuable roadmap.

In this post, I set out a guideline on how to deploy Dify AI on Cloudflare Workers. Dify AI is a helpful AI platform workbench that supports multiple tasks and makes work run more smoothly.

This post outlines a structured, methodical approach to troubleshooting performance issues in containerized or server-based environments. It emphasizes a hypothesis-driven process, guided by key performance metrics, to accurately diagnose and resolve problems.

This post is a getting-started guide to running our own personalized self-hosted setup: which resources need to be prepared, and how to do it easily.

In this post, I try out several available Terraform modules to automate various tasks in AWS.

This post introduces cloud features, especially on Amazon Web Services (AWS): their use cases, their benefits, and how AWS compares with other cloud providers.